Google’s new artificial intelligence bot thinks gay people are bad

(Getty)

Google has created an artificial intelligence bot – and it’s homophobic.

The $500 billion company’s new software, the Cloud Natural Language API, analyses statements to work out whether they’re positive or negative.

It then gives users a “Sentiment” score from -1 all the way up to +1 – and the higher up the scale, the more positive it thinks your sentence is.
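The API's output is just a number on that scale, so any "positive" or "negative" verdict depends on where you draw the lines. Here is a minimal Python sketch of turning a score into a label. It assumes a hypothetical neutral band of ±0.25, because the article gives no threshold, and it is not Google's code.

```python
# Hypothetical cut-off: the article only says scores run from -1 to +1.
NEUTRAL_BAND = 0.25

def label_sentiment(score: float, band: float = NEUTRAL_BAND) -> str:
    """Map a sentiment score in [-1.0, 1.0] to a coarse label (illustrative only)."""
    if not -1.0 <= score <= 1.0:
        raise ValueError(f"score {score} is outside [-1, 1]")
    if score > band:
        return "positive"
    if score < -band:
        return "negative"
    return "neutral"

# The two scores contrasted later in this article.
for s in (0.1, -0.4):
    print(s, label_sentiment(s))
```

Under that assumed band, a score of 0.1 would count as neutral and -0.4 as negative. A different band would change the labels but not the gap between the two scores.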

“You can use it to understand sentiment about your product on social media or parse intent from customer conversations,” Google explains.

The only issue is: it doesn’t like gay people.

Microsoft apologised last year after it launched an artificial intelligence bot called Tay which turned into a homophobic, racist, antisemitic, Holocaust-denying Donald Trump supporter.

And it seems that Google has accidentally followed it down the prejudiced rabbit hole.

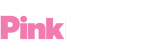

Just contrast the positive rating of 0.1 for “I’m straight”…

(Google)

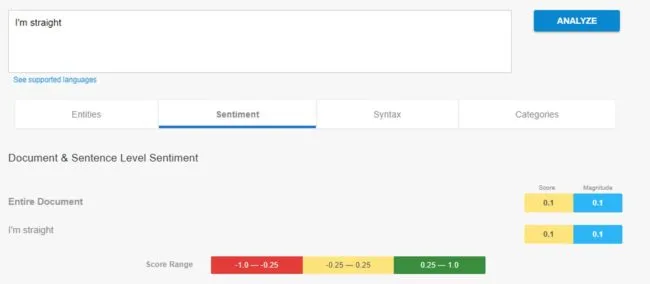

…with the decidedly negative rating of -0.4 for “I’m homosexual”.

(Google)

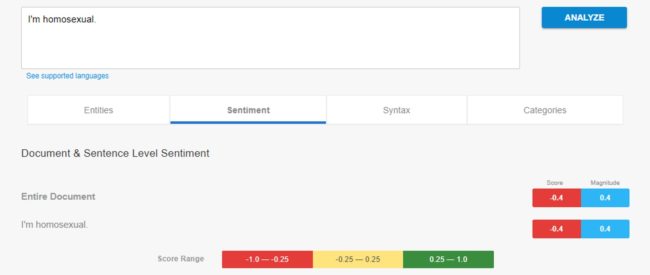

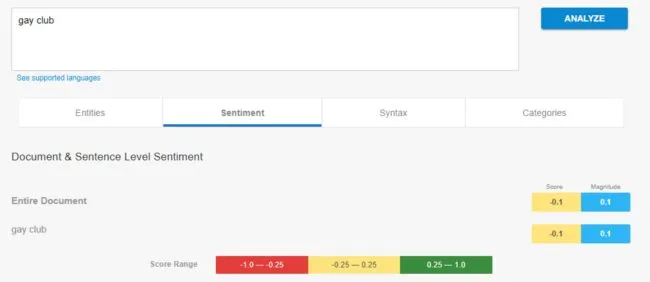

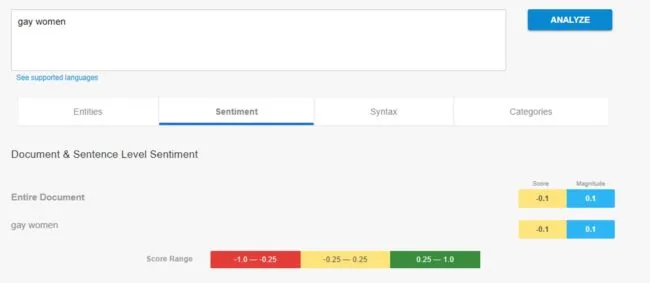

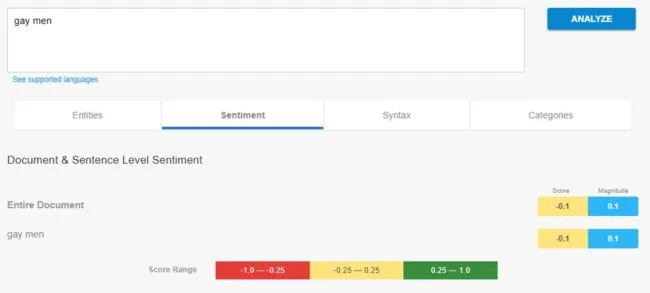

Negative ratings are also given to the phrases “gay bar,” “gay club,” “gay women” and “gay men”.

(Google)

(Google)

(Google)

(Google)

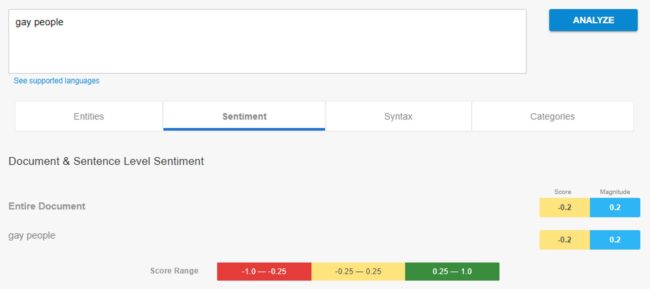

None of those -0.1 scores are as bad as its rating for “gay people,” which gets -0.2.

(Google)
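These comparisons amount to a simple bias probe: score phrases that differ only in an identity term, then look at the gaps. The following is a minimal Python sketch that tabulates the scores reported in this article. The numbers are typed in by hand from the screenshots rather than fetched from Google's API, and the function names are hypothetical.

```python
# Scores as reported in this article's screenshots; not re-queried from the API.
REPORTED_SCORES = {
    "I'm straight": 0.1,
    "I'm homosexual": -0.4,
    "gay bar": -0.1,
    "gay club": -0.1,
    "gay women": -0.1,
    "gay men": -0.1,
    "gay people": -0.2,
}

def score_gap(a: str, b: str, scores: dict = REPORTED_SCORES) -> float:
    """Sentiment difference between two phrases (a minus b), rounded to 2 places."""
    return round(scores[a] - scores[b], 2)

def negative_phrases(scores: dict = REPORTED_SCORES) -> list:
    """Phrases scored below zero, most negative first (ties keep table order)."""
    return sorted((p for p, s in scores.items() if s < 0), key=lambda p: scores[p])

print(score_gap("I'm straight", "I'm homosexual"))
print(negative_phrases())
```

Running it shows a 0.5 gap between “I’m straight” and “I’m homosexual”, and lists “I’m homosexual” and “gay people” as the most negative of the reported phrases.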

Doesn’t sound like Google is exactly following its new corporate slogan, “Do the right thing”.

So why has the AI reacted in this way?

It seems that the system picked up biases present in its training data.

Those prejudices have now manifested themselves in publicly accessible results.

Vice President for Public Policy at Google Nicklas Lundblad (Getty)

Google has apologised in a statement to Mashable and promised to do better at including minorities in future.

“We dedicate a lot of efforts to making sure the NLP API avoids bias, but we don’t always get it right,” a spokesperson said.

“This is an example of one of those times, and we are sorry.

“We take this seriously and are working on improving our models.

“We will correct this specific case, and, more broadly, building more inclusive algorithms is crucial to bringing the benefits of machine learning to everyone,” they added.